Apparently I have a habit of waking up at 4 am about once a week, checking my weather app and seeing it’s clear out — so I get my stuff together and take astro-photos. I enjoy that a lot, here is a nice pic of the moon:

Again I woke up at 4 am and, as is my pattern now, checked the weather app. It said “mostly clear”, so, ok, why sleep? I can go out and at least line up the finder scope to the camera… All photos here were done without the tracker, on a tripod, at 200mm focal length, and yeah… maybe this was dumb, but with the lens at F8…

Well there was a nice moon out and Jupiter and Saturn… sooo… I used my bahtinov mask to focus in on Jupiter (since it’s nice and bright) and snapped this pic of it… focus ok, over exposed Jupiter, but I get 3 moons:

Then I get the focus and exposure better, but no moons (unless I push some sliders in Lightroom):

Then I take a shot of Saturn… oh… it is pretty far away. At 200mm it’s just a few pixels, but you can imagine its overall shape:

That’s greatly cropped, but if you click on it I hope you can see it 🙂

And now what you’ve been waiting for… here is a nice pretty well focused and exposed shot of the moon:

I told myself I would not get up in the wee hours of the night for photos. I really need sleep, and my new plan was, ok, just do solar stuff till we get into winter, when it gets dark enough while I’m still awake…

So I’ve built a star tracker, and with a 40mm lens and only using an iPhone compass and bubble level to polar align it, I was able to get 30 second photos without star trails.

But I need to push the envelope. I want longer exposures and now have an external intervalometer to do that, and I also want to be able to use my 18-200mm lens at 200mm.

Initial tests with the 18-200mm lens, again with iPhone alignment, said: I get star trails. So I set it up level in the house and ran it for a while with the camera on and the iPhone level on it, making casual readings with a stopwatch, and for sure, the lens was probably too heavy for that little stepper and an 8mm pitch lead screw.

So I got a 4mm pitch lead screw, it should be able to lift twice as much right? Got up at some insane time of night and took photos at 200mm and got star trails.

Ok, so there are at least two things that cause this… ok, three things. One is that the longer the lens, the more the inaccuracies of the whole setup will show and cause star trails. Two is that if the polar alignment is out, you get star trails. Three is that if the system clock of the microcontroller is inaccurate, you get star trails.

So to get a lot more precise in looking for causes, I did this:

I set the tracker up level on a tripod in my house. I put a big X on white background registration mark at the pivot, and at the end of the arm that swings. And I made a 30fps HD video of it for about 16 minutes.

That’s the data collection, now for processing I loaded the movie file into blender and used object tracking to track those two marks for the 16 minutes of the video.

Then I played all day with Blender… ok, but really, then I wrote a Python script that transforms the swing arm tracker’s position so that the pivot position is the origin for each frame, and prints to a file the angle of rotation of the swing arm tracker about the pivot origin.
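A minimal sketch of what that kind of script could look like, assuming the two tracks have already been exported from Blender as lists of (x, y) pixel positions, one per frame. The function names here are made up for illustration and this is not the actual script. Also note that image coordinates often have y pointing down, which flips the sign of the angle.

```python
import math

def arm_angle_deg(pivot_xy, arm_xy):
    """Angle of the arm marker about the pivot marker, in degrees."""
    # Shift coordinates so the pivot marker becomes the origin for this frame.
    dx = arm_xy[0] - pivot_xy[0]
    dy = arm_xy[1] - pivot_xy[1]
    return math.degrees(math.atan2(dy, dx))

def angles_per_frame(pivot_track, arm_track):
    """One angle per video frame, from two equally long lists of (x, y)."""
    return [arm_angle_deg(p, a) for p, a in zip(pivot_track, arm_track)]

def total_swing_deg(angles):
    """Total rotation, summed frame to frame so a +/-180 wrap is handled."""
    total = 0.0
    for prev, cur in zip(angles, angles[1:]):
        # Map each frame-to-frame change into the range [-180, 180).
        total += (cur - prev + 180.0) % 360.0 - 180.0
    return total
```

With tracks like these, `total_swing_deg(angles_per_frame(pivot_track, arm_track))` gives the total swing over the video. Using the pivot as the origin in every frame also cancels out any small shake of the video camera itself.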

Now by subtracting the start angle from the end angle, I had the total degrees (yes, I work in degrees, not radians 🙂) swung in 16 minutes… it should have been so close to 4 degrees it’s not funny. But it actually came out to 3.75-ish degrees, which is about 6% slow.
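The arithmetic behind “so close to 4 degrees” can be checked with a few lines. This is just a sketch: it uses the standard length of a sidereal day (about 86164.09 seconds), and the 3.75 degree figure is the rough measurement from above.

```python
SIDEREAL_DAY_S = 86164.0905  # seconds for the sky to rotate a full 360 degrees

def expected_swing_deg(duration_s):
    """Degrees the arm should swing in duration_s to keep up with the stars."""
    return 360.0 * duration_s / SIDEREAL_DAY_S

def percent_slow(measured_deg, duration_s):
    """How far behind the ideal swing the measured swing is, in percent."""
    expected = expected_swing_deg(duration_s)
    return 100.0 * (expected - measured_deg) / expected

duration = 16 * 60  # the 16 minute video, in seconds
print(f"expected: {expected_swing_deg(duration):.3f} degrees")
print(f"slow by:  {percent_slow(3.75, duration):.1f} percent")
```

The expected swing comes out a hair over 4 degrees, so measured against the true sidereal rate the tracker is slightly more than 6% slow.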

That and a bad polar alignment probably explains star trails… since the stars are rotating slightly faster than the camera. For short exposures, it might not be noticeable, but I want long exposures, and I believe I can do it with this tracker with a little work.

Let’s see, does this data imply that I “should” see star trails in a 60 second exposure? We can figure this out. PhotoPills will give the max exposure time for an untracked photo with a given camera and lens combination. For my camera and that lens, the NPF rule gives a max exposure of 0.94 seconds before a noticeable star trail. Ok, so 6% of 60 seconds is 3.6 seconds, meaning a 60 second exposure on the slow tracker drifts about as much as a 3.6 second untracked shot. That’s well over 0.94, so I should see star trails, and yes, I did. I also took exposures at 30 seconds, which are 1.8 seconds slow. Again that’s greater than 0.94 seconds, and yes, I saw trails.
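The same comparison as a small sketch. It treats the tracking error as a constant fraction (0.06 here) and ignores polar alignment error, so it is a rough estimate, not a full model. The helper names are made up for illustration.

```python
def untracked_equivalent_s(exposure_s, fraction_slow):
    """Seconds of untracked drift that a slow tracker builds up in one exposure."""
    return exposure_s * fraction_slow

def trails_expected(exposure_s, fraction_slow, npf_limit_s):
    """True if the built-up drift exceeds the untracked NPF limit."""
    return untracked_equivalent_s(exposure_s, fraction_slow) > npf_limit_s

def max_exposure_s(fraction_slow, npf_limit_s):
    """Longest exposure that stays within the NPF limit at this error rate."""
    return npf_limit_s / fraction_slow

NPF_LIMIT_S = 0.94   # from the NPF calculation for this camera and lens at 200mm
FRACTION_SLOW = 0.06  # measured from the 16 minute video

for exposure in (30, 60):
    drift = untracked_equivalent_s(exposure, FRACTION_SLOW)
    print(exposure, "s exposure:", round(drift, 2), "s of drift, trails:",
          trails_expected(exposure, FRACTION_SLOW, NPF_LIMIT_S))
print("max exposure:", round(max_exposure_s(FRACTION_SLOW, NPF_LIMIT_S), 1), "s")
```

Under these assumptions, anything much longer than about 15 seconds at 200mm would be expected to trail until the 6% error is corrected.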

So the barn door tracker is basically a sidereal clock. At the root of it is its dependence on an accurate system time, since the number of turns per degree for the lead screw depends on how wide the hinge is already open… Unlike a tracker with a curved screw, this tracker has a straight screw, so the steps per degree need to be variable.
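To show why the steps per degree change as the hinge opens, here is a rough sketch of the step calculation for one common straight-screw layout. Everything specific in it is an assumption for illustration, not the tracker’s real firmware: an isosceles geometry where the screw spans the chord between two equal arms, a 200 mm hinge-to-screw distance, a 200 step per revolution motor with no microstepping, and 4 mm of screw travel per revolution.

```python
import math

SIDEREAL_DAY_S = 86164.0905  # seconds for one full rotation of the sky

def hinge_angle_rad(t_s):
    """Hinge opening needed at time t_s, starting from a closed hinge."""
    return 2.0 * math.pi * t_s / SIDEREAL_DAY_S

def screw_length_mm(angle_rad, arm_mm):
    """Chord between the two arm ends for an isosceles barn door (assumed layout)."""
    return 2.0 * arm_mm * math.sin(angle_rad / 2.0)

def steps_at_time(t_s, arm_mm=200.0, travel_per_rev_mm=4.0, steps_per_rev=200):
    """Total motor steps that should have been taken by time t_s."""
    length = screw_length_mm(hinge_angle_rad(t_s), arm_mm)
    return round(length / travel_per_rev_mm * steps_per_rev)

first_hour = steps_at_time(3600)
second_hour = steps_at_time(7200) - first_hour
print(first_hour, second_hour)  # the second hour needs slightly fewer steps
```

Because the chord grows with the sine of half the angle, equal amounts of time need slightly different numbers of steps, which is why the step rate has to be computed from the elapsed time instead of being a fixed constant.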

Well, the system clock on the ESP32 is based on a quartz crystal, so that in itself is very accurate, but there are a plethora of other places inaccuracies come in. We’re dealing with physical things, all manufactured to their individual tolerances… the screw is a 4mm pitch screw, but not exactly 4mm. The stepper has so many steps per revolution, and that should be accurate, but how accurate? The trigonometry used in the program to compute the number of steps at time T depends on the dimensions of the tracker. The designer used the dimensions in the design files, but what I have is something printed on my printer… My printer prints things to pretty accurate dimensions, but accurate enough? How far off from ideal?

So you could go through the whole system looking for sources of inaccuracies… and try to refine things, but there is another approach….

So in 16 minutes I measured a 6% discrepancy in angle… (ooh.. there’s also inaccuracies in my measurements too), how consistent is that? Well looking at the star trails in a 1 minute exposure gives me nice clean looking trails… if there was any speeding up and slowing down cycle, or just changes in speed the thickness of the trails would not be nice and uniform.

So on the assumption that the system all together is slow, what I can do is make a longer measurement. Set it up again, and video it for like 2 hours (the max I’d want to use the thing without resetting it)… and run that through my Blender code. From that I should be able to make a time dependent correction factor to the number of steps needed at any given point in time to bring it into correct timing.
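One way such a time dependent correction could work, sketched under some assumptions: the long video gives a table of (time, measured angle) samples, the measured angle only ever increases, and the tracker behaves the same way on every run. The idea is to look up the time at which the real arm reached the angle the sky needs now, and feed that “warped” time into the step calculation. The function name and the sample table are hypothetical.

```python
from bisect import bisect_left

SIDEREAL_DEG_PER_S = 360.0 / 86164.0905  # ideal swing rate of the arm

def corrected_time(t_s, sample_times, sample_angles):
    """Time to use in the step calculation so the arm is at the ideal angle at t_s.

    sample_times and sample_angles are the measured table from the video;
    sample_angles must be strictly increasing.
    """
    target = SIDEREAL_DEG_PER_S * t_s  # angle the sky has turned by t_s
    i = bisect_left(sample_angles, target)
    if i == 0:
        return sample_times[0]
    if i >= len(sample_angles):
        i = len(sample_angles) - 1  # past the table: extend the last segment
    a0, a1 = sample_angles[i - 1], sample_angles[i]
    t0, t1 = sample_times[i - 1], sample_times[i]
    # Linear interpolation between the two neighboring samples.
    return t0 + (target - a0) * (t1 - t0) / (a1 - a0)

# Hypothetical table for a tracker that runs a steady 6% slow.
times = [0, 300, 600, 900, 1200]
angles = [0.94 * SIDEREAL_DEG_PER_S * t for t in times]
print(round(corrected_time(600, times, angles), 1))
```

For a tracker that is uniformly slow this just scales time by a constant, but the same lookup would also pick up an error that changes over the two hours, as long as it repeats from run to run.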

It’s worth a try — alternatively I say — well this was fun— and spend thousands on a manufactured equatorial mount, and a guide scope… Thousands. Of. Dollars.

I think I’ll try the hardware hacking approach.

OK, so a few days back I posted about a couple clips I made so I could attach a red dot finder to both my camera and my Astra tracker.

See that entry for how I thought it should go, but how it actually went was I could not calibrate the scope. No matter how much I tried I got star trails even at 30 sec exposures, and the other night, just using an iPhone compass and level I was able to do 1 minute exposures with no trails.

So back to the drawing board. A person on thingiverse.com posted a shoe to attach the same red dot finder that I bought to the Astra. It has a lower profile and it looks like it will be far less wobbly than the one I made.

I do still need to adapt it to attach to my camera hot shoe, because I have no other way I can think about to calibrate the finder. It has 2 thumb screws one for fine adjust up/down and one for left/right.

To adjust those on the mount alone seems impossible. You’d have to use the iPhone to get a rough alignment, dial the scope to the north star, take a photo, see if it trails and then… what?… guess which way to move the mount and hunt by trial and error for no trails?

No I figure if I attach it to the camera on a tripod, center a bright star in the camera view, which seems easy since there is a red box to center it in, dial in the finder, move the finder to the Astra mount, and well it should now be easy to point that at the north star.

It will take another sleepless, but clear, night to try again… I got up at 3:30am today, and clouds rolled in by 4:45, so also there’s not a lot of time to experiment in a cloudy region.

How would you describe yourself to someone who can’t see you?

I’m an entity with a spatial localization within a collection of quantum particles. My particles reflect photons, but you can’t sense them.

Well, I have seemingly dozens of USB cables sitting around with every conceivable combination of end connectors and lengths. I need them all, but not all at once. I’ve tried stuffing them in a drawer, but then they tangle and make it hard to find the one I need at the moment.

I can’t be the only one swimming in USB cables. How can I organize them?

Was today typical?

A typical day: waking up before 5 am, figuring I can go outside and align my red dot finder to my camera and star tracker mount by sighting some stars. But no. I lost the adaptor to attach the finder to my camera’s hot shoe.

So I printed another one, and by then it’s daylight, so I have to hope for clear skies tonight to try again.

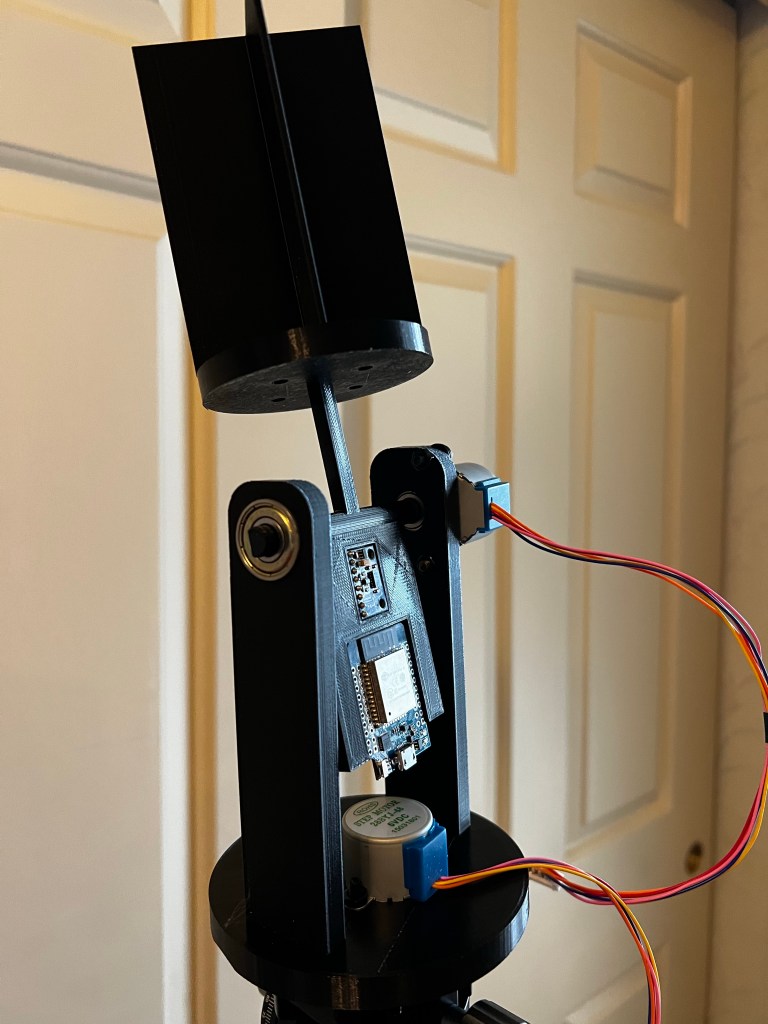

Well I made progress on the solar tracker. All 3D printing done. Mechanical assembly done.

Next, I need to solder pins onto the ESP32 and wire it up and figure out where the two stepper driver boards go.

As you can see, the electronics fit into recesses in the tilting platform. The smaller module is the magnetometer/accelerometer.

In practice, the azimuth axis needs to spin about 370 degrees while the code does compass calibration. Since the ESP32 and the stepper move together on that axis, the wiring between them won’t be a problem, but the USB cable feeding power and serial will need enough slack for the full rotation.

On the alt axis, the wiring to the stepper only needs enough slack for 90 degrees of tilt. The software will prevent over-rotation. However, again, the USB cable might be a problem, as you can see there is not a lot of clearance for the connector between it and the stepper.

Once the software is finalized, I/O to the tracker will be over WiFi, so instead of a USB cable, I should be able to attach batteries and a voltage regulator. Then only two power lines will attach to the ESP32, making cabling easier.

Well I’d start off by explaining it is a compound word of two words, “your” and “self”. “self” pertains to individual identities, and “your” is talking about a personal possession of the person in question. So now to describe “yourself”, it is a word pertaining to your personal identity– in essence “you”. I hope this clears up any questions.

A few weeks ago I decided to try my hand at selling stock photos on Adobe Stock. I have a Nikon D7000, and one thing I learned real quick was that though I can get nice close shots with my 18-200mm Nikon lens, the photos are not super sharp like you’d see with an actual macro lens.

So I purchased a Nikon Micro 40mm dx lens.. It’s one of Nikon’s suggested macro lenses for the D7000. This lens focuses real close, and though they say 40mm is on the short side for doing macro of things like insects that would get skittish with a lens getting close to them, I liked that it would also serve as an all around sharp lens.. And wow is it sharp. At least to my eyes.

The lens came when my camera body was in for factory service (I decided to get it all tuned up). And as soon as the camera came back I was out taking flower photos. Here is one I really like, a bee on a cone flower in our garden.

Now this is not a VR lens, so it takes a steady hand, good shutter speed, or a tripod. But that photo was one of the first ones I took with the lens, and I did it handheld while the bee worked its way around the flower. I like the sharpness of the bee, but the depth of field is shallow. I like it that way; the only way to get it all in focus would be with a smaller aperture (a higher F-stop number), but hey.. the bee was moving too fast to experiment on the fly (pun intended).

Here are some more flower shots I did today. Again handheld and at like F8 or F11 and very fast shutter speed.

The reason I said wow in the title here is that as an all around lens it is also fantastic. I did a timer shot with the camera on a tripod, my wife standing exactly where I needed her, and I ran into the shot before the exposure. I’ll not post that photo here, but what I got was so hi-res that for the first time I need to consider processing to make portraits more flattering. In other words it captured everything about our faces in hi-def.. and so would actually need some softening.

All of these photos and our portrait photo were taken with autofocus, and they were all taken with the lens in infinity-.5feet mode, rather than full mode.

The bee photo was already approved by Adobe Stock and is available for purchase here. And a photo of just cone flowers is here.

I just uploaded today’s flower photos, and unfortunately it will be some time before Adobe considers them due to the deluge of AI generated photos they are getting these days.

This blog entry looks like a product review, so I need to say, I have no material connection to Nikon, it just happens I bought their lens and like it so much I’m blogging about it.

Here is my Amazon affiliate link if you’d like to purchase the lens from Amazon, click the photo of the lens: